CEL.IA – Advanced AI Solutions for Human-Machine Interfaces

CEL.IA (AI Solutions for Human-Machine Interfaces) is a project that aims to integrate advanced technologies such as virtual and augmented reality with AI. The initiative enhances AI applications across various sectors, including industry and health.

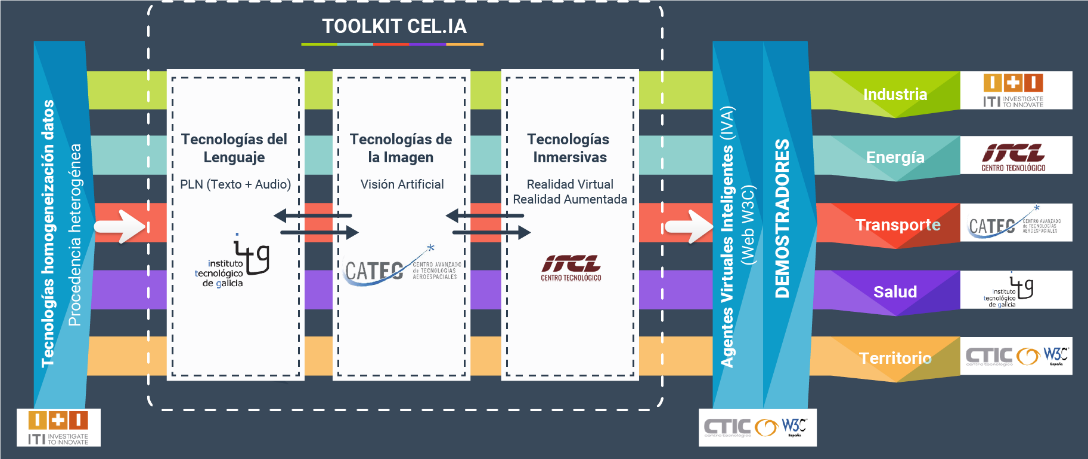

CEL.IA is a strategic research project carried out in cooperation between several technology centers. It pools their efforts to develop a “Toolkit”, a complete set of solutions based on virtual and augmented reality, computer vision and natural language processing, to facilitate the effective incorporation of Artificial Intelligence into human-machine interfaces.

HMI | Virtual Reality | Augmented Reality | Mixed Reality | Holograms | Immersive Simulation | Artificial Intelligence | Deep Learning | Procedural Scenarios

What is CEL.IA?

The project develops three technological lines:

- Toolkit of solutions based on virtual and augmented reality, computer vision and natural language processing for Human-Machine Interfaces (HMI).

- Data homogenization systems

- Intelligent virtual agents

The project aims to demonstrate, through innovative virtual agents, how the Toolkit tools can be used to meet the needs of companies in five application areas: industry, energy, transportation, health and intelligent territories.

Each of the centers coordinates a technological demonstrator of the developed solutions.

ITCL coordinates the Energy Demonstrator, which focuses on renewable energy sources, power generation rooms in industrial facilities and other critical installations.

In the demonstrator, virtual assistants, synchronization with robots through virtual reality for complex work operations, and advanced simulation will offer a new user experience that will make it possible to:

- Operate remotely

- Use immersive reality to steer robots in extreme environments.

- Train maintenance and operations personnel in highly immersive simulations.

- Recreate and render an energy installation in real time.

- Design digital twins for the creation of risk-free simulators for work teams.

- Visualize large amounts of 3D data (point clouds) in virtual reality, and detect and recognize 3D objects without markers in augmented reality.

- Analyze the behavior of installations by modeling data in real time and visualizing it in advanced interfaces.

- Generate 3D scenarios procedurally from maps to contextualize energy facilities, for use in VR, AR and MR applications alike.

- Classify defects from images.
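Visualizing large point clouds in VR usually means reducing the number of points before rendering. The source does not say which method the demonstrator uses; the sketch below shows voxel-grid downsampling, a common technique chosen here purely for illustration, which replaces all points falling in the same cubic cell with their centroid. The function name `voxel_downsample` and its `voxel_size` parameter are hypothetical.

```python
from collections import defaultdict
from math import floor

Point = tuple[float, float, float]


def voxel_downsample(points: list[Point], voxel_size: float) -> list[Point]:
    """Hypothetical helper: keep one centroid per occupied voxel."""
    if voxel_size <= 0:
        raise ValueError("voxel_size must be positive")
    # Group points by the integer index of the cubic cell (voxel) they fall in.
    cells: dict[tuple[int, int, int], list[Point]] = defaultdict(list)
    for x, y, z in points:
        key = (floor(x / voxel_size), floor(y / voxel_size), floor(z / voxel_size))
        cells[key].append((x, y, z))
    # Replace every group of points with its centroid.
    result: list[Point] = []
    for group in cells.values():
        n = len(group)
        result.append((
            sum(p[0] for p in group) / n,
            sum(p[1] for p in group) / n,
            sum(p[2] for p in group) / n,
        ))
    return result


if __name__ == "__main__":
    cloud = [(0.1, 0.1, 0.1), (0.3, 0.2, 0.1), (1.5, 1.5, 1.5)]
    print(voxel_downsample(cloud, voxel_size=1.0))
```

A larger `voxel_size` trades geometric detail for fewer points to render, which is the usual knob for keeping VR frame rates stable.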

Problem CEL.IA intends to solve: improving AI integration in HMI

Improve data processing capacity:

Cloud infrastructure is usually used to process and centralize large amounts of data. However, a new paradigm based on Edge Computing aims to improve processing speed and real-time response while ensuring confidentiality and privacy. Besides bandwidth and processing speed requirements, some contexts also impose energy, space or mobility constraints, which can be a handicap.

In these environments, processing elements such as FPGAs or MPSoCs are particularly relevant: they execute algorithms faster and with lower power consumption, and they are suitable for integration into Edge devices deployed in the field. These devices also align with the green algorithms movement [16], which seeks to reduce the environmental footprint of technology through faster, more energy-efficient execution.
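Why both speed and power matter can be made explicit with the basic relation between energy, average power and execution time. For one run of an algorithm (for instance, one inference) with average power draw $\bar{P}$ and execution time $t$, the energy consumed is their product, so reducing either factor lowers the energy per run. The symbols and the "edge" versus "ref" (reference platform) labels are introduced here for illustration only and do not come from the project.

```latex
E_{\text{run}} = \bar{P}\, t_{\text{run}},
\qquad
\frac{E_{\text{edge}}}{E_{\text{ref}}}
  = \frac{\bar{P}_{\text{edge}}}{\bar{P}_{\text{ref}}}
    \cdot \frac{t_{\text{edge}}}{t_{\text{ref}}}
```

For example, a device that halves both the average power draw and the execution time consumes one quarter of the energy per run ($0.5 \times 0.5 = 0.25$).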

Optimizing data management in virtual environments

The main advantage of virtual environments (VEs) is the low cost of generating realistic data, together with the variety of labeled data they can produce, such as depth maps, semantic segmentation or details of the dynamic properties of objects. Because scenarios and simulations can be configured for each particular case, even for facilities that have not yet been built, VEs provide large data sets under different conditions as a starting point. This saves time and infrastructure costs and improves the quality of the results.

In this respect, the project aims to offer integrated solutions that combine the generation of synthetic data for training algorithms with virtual environments in which to produce it, a distinguishing feature compared with the current state of the art.
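The cost advantage comes from the fact that a renderer knows the ground truth of every pixel it draws, so labels come for free. As a minimal, hypothetical illustration (not the project's pipeline), the sketch below "renders" random rectangles into a small grid and, from the same z-buffer pass, emits an intensity image, a per-pixel semantic label map and a depth map. The `render_sample` function, the class ids 1 to 3 and the background depth are assumptions made for the example.

```python
import random
from dataclasses import dataclass


@dataclass
class SyntheticSample:
    image: list[list[float]]  # grey-level intensity per pixel
    labels: list[list[int]]   # semantic class per pixel (0 = background)
    depth: list[list[float]]  # distance from the camera per pixel


def render_sample(width: int, height: int, n_boxes: int, seed: int) -> SyntheticSample:
    """Hypothetical toy renderer: image, labels and depth from one pass."""
    rng = random.Random(seed)  # fixed seed -> reproducible sample
    background_depth = 10.0
    image = [[0.0] * width for _ in range(height)]
    labels = [[0] * width for _ in range(height)]
    depth = [[background_depth] * width for _ in range(height)]
    for _ in range(n_boxes):
        cls = rng.randint(1, 3)                 # hypothetical class ids
        d = rng.uniform(1.0, background_depth)  # box distance from the camera
        x0, y0 = rng.randrange(width), rng.randrange(height)
        x1 = min(width, x0 + rng.randint(1, width // 2 + 1))
        y1 = min(height, y0 + rng.randint(1, height // 2 + 1))
        for y in range(y0, y1):
            for x in range(x0, x1):
                # Z-buffer test: the nearest surface wins, as in a real renderer,
                # so the label and depth of each pixel always agree with the image.
                if d < depth[y][x]:
                    depth[y][x] = d
                    labels[y][x] = cls
                    image[y][x] = cls / 3.0
    return SyntheticSample(image, labels, depth)


if __name__ == "__main__":
    sample = render_sample(width=8, height=6, n_boxes=3, seed=42)
    for row in sample.labels:
        print(row)
```

Changing `seed` yields a new, fully labeled sample with no manual annotation, which is the property that makes VEs attractive for building large training sets.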

CEL.IA Objectives:

ITCL participates in the following lines of development:

Deep Learning:

Development of new deep learning tools that reduce the time needed for deep neural network training.

These new tools and architectures will address diverse use cases, such as object and person detection or gesture and movement detection.

Applications of “green algorithms” for image recognition in Edge Computing

Immersive Technologies: Virtual Environments for Training AI Algorithms

Immersive Technologies: Real-time Mixed Reality

ITCL will develop a tool that enables remote communication with a user wearing mixed reality glasses. The same tool will also be provided in an immersive environment, with the ability to recognize scenarios or environments and relate them to the interactive 3D digital twin. This will allow an expert to give remote instructions to the user, point out three-dimensional positions in the real world, show the user specific parts or tools “holographically” in their corresponding place, and even demonstrate the exact manipulation to perform with them.
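Pointing out a 3D position, or placing a hologram in its corresponding place, amounts to expressing a point defined in the digital twin's coordinate frame in the coordinate frame of the user's glasses. Assuming the scenario-recognition step yields the rigid transform between the two frames (a rotation R and a translation t), the mapping is p' = R p + t. The sketch below is a hypothetical illustration: the function names and the rotation about the vertical axis only are simplifications, not the project's implementation.

```python
import math

Vec3 = tuple[float, float, float]
Mat3 = list[list[float]]


def yaw_rotation(theta: float) -> Mat3:
    """Rotation about the vertical (y) axis by theta radians."""
    c, s = math.cos(theta), math.sin(theta)
    return [[c, 0.0, s],
            [0.0, 1.0, 0.0],
            [-s, 0.0, c]]


def twin_to_headset(p: Vec3, rotation: Mat3, translation: Vec3) -> Vec3:
    """Map a point from the digital-twin frame to the headset frame: p' = R p + t."""
    x, y, z = (
        sum(rotation[i][j] * p[j] for j in range(3)) + translation[i]
        for i in range(3)
    )
    return (x, y, z)


if __name__ == "__main__":
    # A point marked by the expert at (1, 0, 0) in the twin; the twin frame is
    # assumed to be rotated 90 degrees and offset 2 m from the headset frame.
    R = yaw_rotation(math.pi / 2)
    print(twin_to_headset((1.0, 0.0, 0.0), R, (0.0, 0.0, -2.0)))
```

In a real system R and t would be re-estimated continuously as the glasses track the scene; the transform itself stays the same.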

New immersive simulation techniques

Currently, no tool offers a quick way to recreate real scenarios adapted to specific needs. This line of work is expected to incorporate simulation solutions into the Toolkit in fields such as industry (ITI), energy (ITCL) and health (ITG), deployed on 3D scenarios that meet the specifications of the upcoming W3C Immersive Web standards (CTIC).

Data visualization will also become critical, offering new user experiences and novel HMI approaches with these technologies (ITI). Advances in data visualization will find their greatest expression in virtual assistants with digital avatars and chatbots.

Robotic synchronization with Virtual Reality

Application of VR in the remote management of robotic systems.

Development of a tool that allows the user to direct an autonomous ground vehicle equipped with cameras that show the vehicle's surroundings. The robot is expected to interact with its remote operator in real time, supported by 5G technology and a sensor system.

Design and conception of data spaces and their governance.

Providing mechanisms to manage the data lifecycle, from extraction at the source to conversion into information, requires complex and/or costly solutions. The current trend for meeting this challenge is Data Spaces, which are based on open-source technologies. The project expects to design an advanced prototype with the complete set of functionalities needed to manage data extraction, governance, integrity and persistence.
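Data Spaces rely on architectures and connector software that the source does not detail. The sketch below only illustrates two of the listed functions in miniature: integrity, via a SHA-256 fingerprint of a canonical JSON serialization, and governance, via a per-record set of roles allowed to read it. `DataRecord`, its fields and the role names are hypothetical and do not correspond to any Data Space standard.

```python
import hashlib
import json
from dataclasses import dataclass, field


def fingerprint(payload: dict) -> str:
    """SHA-256 of canonical JSON (sorted keys, no spaces), so equal payloads hash equally."""
    canonical = json.dumps(payload, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


@dataclass
class DataRecord:
    source: str                                           # where the data was extracted
    payload: dict                                         # the extracted data itself
    allowed_roles: set[str] = field(default_factory=set)  # governance: who may read it
    digest: str = ""                                      # integrity: fingerprint at sealing time

    def seal(self) -> None:
        """Record the fingerprint of the payload as it is now."""
        self.digest = fingerprint(self.payload)

    def is_intact(self) -> bool:
        """True if the payload has not changed since it was sealed."""
        return self.digest == fingerprint(self.payload)

    def can_read(self, role: str) -> bool:
        """Minimal access-policy check."""
        return role in self.allowed_roles


if __name__ == "__main__":
    record = DataRecord("sensor-01", {"temp_c": 41.5, "asset": "turbine-3"}, {"operator"})
    record.seal()
    print(record.is_intact(), record.can_read("guest"))
```

Hashing a canonical serialization rather than the in-memory object keeps the fingerprint stable across processes, which matters once records are persisted and exchanged.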

Advanced Human-Machine Interfaces for data visualization by Augmented Reality

CEL.IA Duration:

2021 – 2023

Cooperation Project With:

Financed by:

Centro de Excelencia Cervera

Contact Person:

Blanca Moral – ITCL
